UCSD’s Timothy O’Connor and Darren Lipomi have developed The Language of Glove, a glove that wirelessly translates the American Sign Language alphabet into text and controls a virtual hand to mimic sign language gestures. It was built for less than $100 using stretchable and printable electronics.

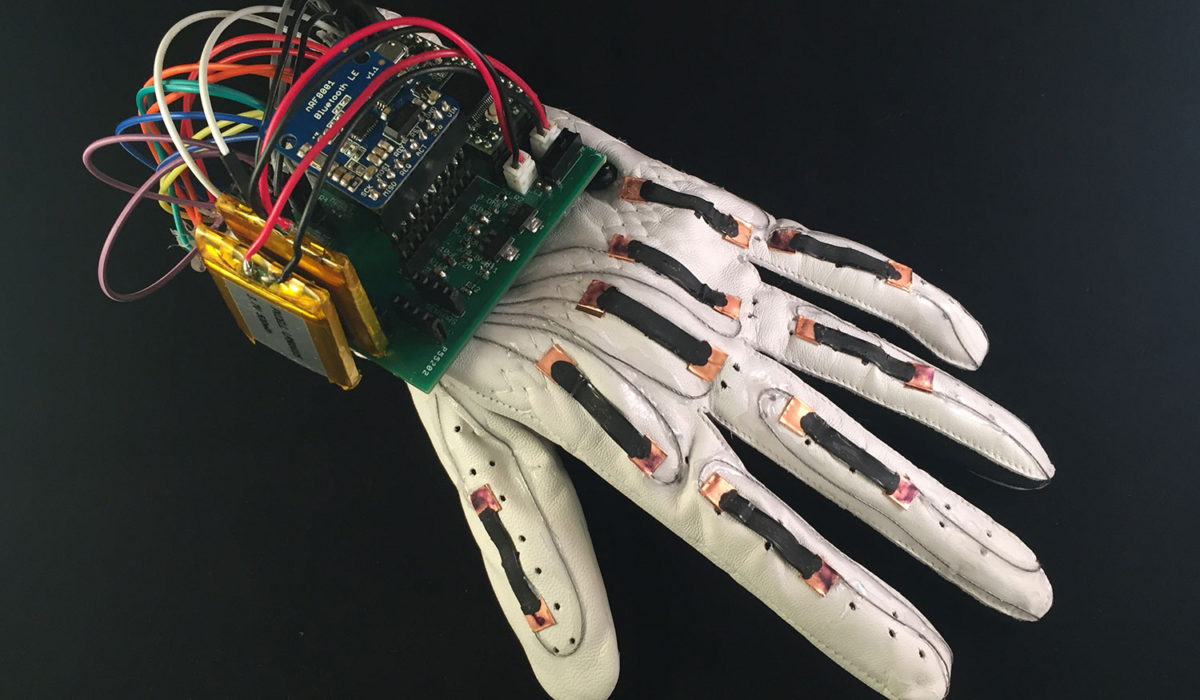

Nine silicon-based polymer sensors, coated with conductive carbon paint, were attached to the back of a leather glove over the knuckles. They were secured with copper tape, and stainless steel thread connected them to a low-power, custom printed circuit board on the back of the wrist.

The sensors changed their electrical resistance when stretched or bent. This allowed the glove to encode different letters of the American Sign Language alphabet, based on the positions of all nine knuckles. The circuit board converted each code into a letter and transmitted it via Bluetooth to a phone or computer.
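
To illustrate the idea, here is a minimal Python sketch of how nine resistance readings could be turned into a letter. It is not the team's firmware. It assumes each knuckle is reduced to a single bit (bent or straight) by comparing its relative change in resistance against a threshold, and that the resulting nine-bit key is looked up in a table. The threshold value, the function names, and the two codebook entries are all hypothetical placeholders.

```python
from typing import Optional, Sequence

NUM_SENSORS = 9

# Hypothetical threshold: relative resistance change above which
# a knuckle is treated as bent.
BEND_THRESHOLD = 0.5

# Illustrative codebook only. These keys are placeholders chosen for the
# example, not the codes used by the actual glove.
CODEBOOK = {
    "011111111": "A",
    "100000000": "B",
}


def knuckle_code(
    baseline: Sequence[float],
    readings: Sequence[float],
    threshold: float = BEND_THRESHOLD,
) -> str:
    """Return a nine-character string of '0' (straight) and '1' (bent)."""
    if len(baseline) != NUM_SENSORS or len(readings) != NUM_SENSORS:
        raise ValueError(f"expected {NUM_SENSORS} values per reading")
    bits = []
    for r0, r in zip(baseline, readings):
        # Relative change in resistance against the unbent baseline.
        relative_change = (r - r0) / r0
        bits.append("1" if relative_change > threshold else "0")
    return "".join(bits)


def decode_letter(code: str) -> Optional[str]:
    """Look up a nine-bit key; return None if it matches no known letter."""
    return CODEBOOK.get(code)


# Example: first sensor unchanged, the other eight doubled in resistance.
baseline = [100.0] * NUM_SENSORS
readings = [100.0] + [200.0] * 8
code = knuckle_code(baseline, readings)
letter = decode_letter(code)
```

In this sketch, a key that matches no table entry returns `None`, so a caller could simply ignore hand positions that fall between letters rather than sending a wrong character.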

The team is investigating other uses for the glove, including VR, AR, telesurgery, technical training, and defense. The next version is intended to have a sense of touch.
